| Name | Student ID |

|---|---|

| Sidharth Vinod | 2400635 |

| Lim Bing Xian | 2401649 |

| Tan Yu Xuan | 2400653 |

| Boo Wai Yee Terry | 2402445 |

| Haris Bin Ahmad Rithaudeen | 2403053 |

- Overview

- Exercise Classes

- Demo

- Project Poster

- Quick Start

- Project Structure

- Dataset

- Model Architectures

- Training Details

- Results

- Future Improvements

- References

This project implements and compares three state-of-the-art video classification architectures for identifying gym exercises from video input. The system can accurately classify five different exercises by analyzing both spatial features (body posture) and temporal dynamics (movement patterns).

Key Features:

- Real-time exercise classification from video

- Comparison of 2D CNN, 3D CNN, and Transformer-based approaches

- Interactive Streamlit web application

- Achieves 94.12% accuracy with VideoMAE model

The classifier recognizes the following five exercises:

- Bicep Curls

- Push Ups

- Squats

- Shoulder Press

- Lateral Raises

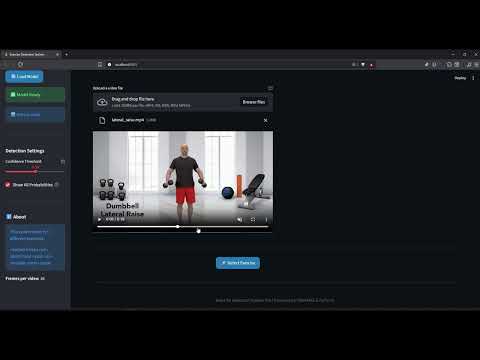

Click the thumbnail below to watch our demo video showcasing the Streamlit application in action:

An intelligent video classification system that identifies 5 different gym exercises using deep learning models trained on video data.

Overview of the AI Video Exercise Classifier project, including model architecture, dataset, and results.

For a high-resolution version of our poster, click to download.

- Python 3.8+

- Git with Git LFS installed

- 8GB+ RAM

- For custom training, we recommend using Google Colab for GPU access.

- PyTorch 2.6.0+cu126 (CUDA 12.6)

-

Clone the repository with Git LFS

git lfs install git clone https://github.com/sidharthvinod24/AAI3001-Project cd AAI3001-Project git lfs pull -

Create a virtual environment

python -m venv env # On Windows env\Scripts\activate # On macOS/Linux source env/bin/activate

-

Install dependencies

pip install -r requirements.txt

-

Launch the Streamlit application

streamlit run main.py

The application will open in your default browser. Upload a video of any supported exercise to get predictions!

To train models from scratch:

- Upload the project folder to Google Drive

- Open

main.ipynbin Google Colab - Select a GPU runtime (Runtime → Change runtime type → GPU)

- Update file paths to match your Google Drive structure

- Run all cells

AAI3001-Project/

├── main.py # Streamlit application

├── main.ipynb # Model training notebook

├── requirements.txt # Python dependencies

├── README.md # Project documentation

├── processed/ # Preprocessed videos (224×224, no audio)

├── pretrained_model/ # Saved model weights

│ ├── videomae/ # VideoMAE model

│ ├── resnet18_model.pth # ResNet18 weights

│ └── pretrained_resnet3d.pth # R3D-18 weights

├── old_exercises/ # Original Kaggle dataset

├── new_exercises/ # Augmented dataset

└── assets/ # Project assets such as poster

Primary Dataset: Kaggle Gym Workout Exercises

- Original dataset containing 20+ exercise types

- Selected 5 exercise classes for this project

Custom Dataset:

- Team-recorded videos to address class imbalance

- Added supplementary examples for minority classes

Preprocessing:

- Resized to 224×224 resolution

- Audio removed

- Format: MP4

VideoMAE is a transformer-based masked autoencoder designed for video understanding. It captures both spatial features (appearance, body posture) and temporal dynamics (motion across frames) in a unified manner.

- Custom Model Definition: Used pretrained VideoMAE [1] and adapted for exercise classification by freezing most encoder layers and training only the last 2 layers. Output layer adjusted to 5 classes.

- Data Augmentation: Resizing, random cropping, horizontal flips, small random rotations, and color jittering.

ResNet18 serves as our 2D convolutional baseline. Average framing is used to aggregate features from sampled frames.

- Custom Model Definition: Pretrained ResNet18 [2] with frozen layers, replaced FC layer with 2 linear layers + ReLU + Dropout, adjusted output to 5 classes.

- Data Augmentation: Random resized crops, horizontal flips, color jitter, grayscale conversion, Gaussian blur.

R3D-18 extends 2D convolutions into the temporal dimension to model motion patterns across frames.

- Custom Model Definition: Pretrained R3D-18 [3] with frozen layers, custom classification head with ReLU and dropout, output adjusted to 5 classes.

- Data Augmentation: Resizing, random cropping, horizontal flips, small rotations, color jitter.

| Parameters | Value |

|---|---|

| Epochs | 5 |

| Optimizer | AdamW (weight decay: 1e-4) |

| Loss Function | CrossEntropyLoss (label smoothing: 0.1) |

| Learning Rate | 5e-5 |

| Scheduler | Cosine with warmup |

| Batch Size | 4 |

| Frames per Video | 16 |

| Training Framework | HuggingFace Trainer API |

| Parameters | Value |

|---|---|

| Epochs | 100 (with early stopping) |

| Optimizer | AdamW (weight decay: 1e-4) |

| Loss Function | CrossEntropyLoss (label smoothing: 0.1) |

| Learning Rate | 1e-4 |

| Scheduler | ReduceLROnPlateau (patience: 5) |

| Batch Size | 8 |

| Frames per Video | 25 |

| Parameters | Value |

|---|---|

| Epochs | 100 (with early stopping) |

| Optimizer | Adam (weight decay: 1e-4) |

| Loss Function | CrossEntropyLoss |

| Learning Rate | 1e-4 |

| Scheduler | ReduceLROnPlateau (patience: 5) |

| Batch Size | 4 |

| Frames per Video | 16 |

| Model | Accuracy | F1-Score | Parameters (trainable) |

|---|---|---|---|

| VideoMAE | 94.12% | 94.14% | ~86M (~5M fine-tuned) |

| R3D-18 | 85.29% | 85.49% | ~33M (~2M fine-tuned) |

| ResNet18 | 82.35% | 82.31% | ~11M (~1M fine-tuned) |

VideoMAE significantly outperforms both CNN-based models due to:

- Rich pretrained representations from masked autoencoding on large-scale video datasets

- Superior temporal modeling through self-attention mechanisms

- Better generalization even with limited training data

R3D-18 improves over ResNet18 by:

- Native temporal feature extraction via 3D convolutions

- Direct motion pattern learning across consecutive frames

ResNet18 provides a solid baseline but:

- Frame averaging loses temporal information

- Limited ability to model motion dynamics

- Increase dataset size.

- Apply advanced data augmentation (e.g., motion warping).

- Use adaptive frame sampling.

License: MIT License. See the LICENSE file for details.

Contact: For questions or collaboration, please open an issue on GitHub.